Whenever I am watching cop shows or the like and someone says, “Does the perp have any priors?” or something similar, all I can think of is, “Oh, he/she was a Bayesian. Cool.”

Month: July 2014

Practice makes not much

I’ve always been deeply suspicious of the “10,000 hours/practice” idea of mastery, because in my experience even if I practice 10 times as long at something for which I have no talent or ability, like operational math, I will still perform far worse than the naturally talented who practice much less.

This seems to show that this intuition of mine is correct.

Some people have intrinsic gifts. Some people have intrinsic deficits. I know this is hard for liberals to accept – used to be hard for me to accept, even – but it seems to be the case.

When I realized that no matter how hard I studied, no matter what I did, I’d never be very good at math, it was actually sort of freeing. It liberated me to concentrate on those things I was and am actually good at without wasting all sorts of time in areas that are essentially useless to me.

As an aside, people who are good at math think, Oh, anyone can learn it! Just takes studying. But this doesn’t appear to be the case. Certain brains are probably predisposed to be good at it, and others not so much.

Luckily or unluckily, I am strangely dichotomous. Math and writing/reading usually are fairly correlated, but not so with me; on standardized tests, I usually score in the bottom 10% or below on mathematical ability and in the top 1/10th of 1 percent (depending on how fine-grained the test is) in reading comprehension, analogy analysis and similar skills.

In other words, if you measure my IQ using more math-based assessments, I generally score in the 50-70 range (which is firmly in the mentally retarded category), and if you use a more verbal-based testing regimen, I break the test, scoring off the charts.

Yeah, but of course there is no such thing as natural talent. That wouldn’t be egalitarian.

Start

When your article starts off with an obvious sophism such as this, why should I even bother to read the rest?

With the disappearance of the desktop computer and the downfall of the desk phone….

The desktop computer is not disappearing. Not going anywhere. In 10 years, there will be nearly as many, and they will be used almost as commonly as they are now, at least in the business world.

Selfishly speaking, what I really like about the average person abandoning a real computer is that they will be at a huge disadvantage to me in the business world.

Any monkey can learn to use a tablet in five minutes. But they don’t really help you with productivity except in edge cases (looking at a Powerpoint before a meeting, for example). But being competent and well-versed with a general purpose computer – well, the fewer people who are capable of that, the better for me.

By 2025 or so, I suspect we will go back to the state of users in about 1998 – approximately 2-3% competent with any real productivity tool, and the rest incapable of touch typing or of using a real work machine.

World has changed

I remember even as recently as five or six years ago, I’d eagerly await software updates, glad of the new features and increased capabilities.

But gradually I’ve learned to dread software updates as these days all they do is to remove features, reduce capabilities and generally make my life worse.

Now a posteriori – that is, from so many observed instances in the wild – I generally try to do anything I can to minimize the number of upgrades that occur, since nothing good will come of them.

But why is modern software so user hostile?

There probably isn’t one reason only. There never is in human affairs. But as I’ve noted before, software can’t help but respond to and reproduce general trends in society. And society right now is very much about removing control and self-determination from individuals – with NSA spying, decreased funding for social programs, increased credentialism, etc.

Mozilla’s now-cachectic Firefox is the example I use most, but there are many others.

If a software update happens to a regular user/consumer product these days, I can almost guarantee that it will get worse. (Interestingly, software for enterprise-class products, which I also use extensively, is still improving.)

I used to be firmly opposed to this, but perhaps there should be some sort of licensing that occurs – similar to a driver’s license test – before one can use a computer, tablet or smart phone.

Would this help? I don’t know. Probably not. But I don’t have any better ideas until someone creates a gene-inserting virus that raises IQ by 30 points.

Gaza

I know it’s for internal politics reasons – the same reason we invaded Iraq – but I can’t really understand what exactly Israel hopes to gain long-term with the Gaza slaughter.

Of course, long-term planning isn’t exactly humanity’s strong suit. As most corporations prove, even large organizations only think a quarter ahead or so.

Why should an entire country be any different?

Testing testing 1… 2… jackboot

I recently set up a test lab of Windows Server 2012 virtual machines for something I’m working on, and I called the group policy for the notional workers “Staff Oppression” to make it seem more like a real company.

Now to add the “Machine reboots unpredictably approximately every five minutes” script….

Bothers

It bothers me that if my partner and I ever started a successful company together, I’d probably automatically get more credit than she does just because I am male.

This despite the fact that we both have really different strengths and weaknesses and in many areas she’s much better, more trained and smarter than me.

Depending on the type of company we started, there’s every chance she’d be the lead and I’d be essentially the assistant – for instance, if we started a programming consultancy with some infrastructure services thrown in.

Average users

This article is a bit misogynistic, but does a good job of showing how interfaces like Australis are actually terrible for average users. Though it is about web interfaces, it applies to any user interface.

We assume everyone knows what a save icon does. My mother used floppy disks for a few years but has undoubtedly forgotten all about their functionality. I see interface after interface that use only icons for actions such as “New document”, “Copy”, and “Delete”. Sometimes I’ll be walking her through some sort of interface over the phone and I’ll tell her to delete a file, only to realize that “Delete” is an icon. I’ll have to tell her at that point to search for some icon that looks like a trash can or an “X” or something. I’ve heard her say something genius after finding it like “Why doesn’t it just say ‘Delete’?”.

Icons are completely antagonistic to the thought process of the average user. (I wish there were a better term than “average user” or “regular user” because in my experience the “average” user is 80 or 90 percent of users.) They simply don’t understand what icons do, even after repeated use, and thus are afraid to click on them for fear of something unexpected occurring.

And when something unexpected does occur – like the old interface being nuked and replaced with a cryptic one, à la Australis – regular users are paralyzed and then jump ship (how do you like them mixed metaphors?).

Understand that if you are redesigning a website that has loyal users, drastically changing an interface on them means they have to relearn all of the links and menus, and that will probably drive them away in frustration.

My partner’s mother did not know what the standard play/pause/stop buttons did in Winamp even after having seen them for at least forty years in other contexts. And guess what? This is completely normal. I am sure everyone reading this blog knows what they do. Not one regular user reads this blog, though. That, like the Mozilla devs, is a self-selection issue that leads to poor design.

That smart people are often only smart about the very, very tiny arena that they know something about should be explored more, in all areas. But that’s a much harder problem, I think.

505

It took me a bit, but I knew I recognized the beret flash and unit crest this guy is wearing.

It’s that of the 505th Parachute Infantry Regiment, of the 82nd Airborne Division. But I knew it was a real paratrooper beret the moment I saw it; it’s folded correctly – which civilians never do, as no one ever tells them how to – and the unit crest and beret flash are correct, and it exhibits the proper wear berets get when they are worn daily and also put into pockets (as one does in the army).

Incidentally, if you haven’t figured it out, the colorful back portion is called the “beret flash” and the metallic front portion is the “unit crest.”

I served in these units as a paratrooper:

Headquarters and Headquarters Company, 82nd Airborne Division

3rd Battalion of the 504th Infantry Regiment, 82nd ABN DIV (attached)

49th Public Affairs Detachment, 82nd ABN DIV (was attached to the 82nd at the time)

I went on quite a few training missions with the 505th PIR in the course of my army job. Funny to see relics from my past coincidentally out in the world, and out of context.

For comparison this is what my beret flash looked like:

And this is what my unit crest looked like (since we were the head unit, we got the full division’s unit insignia):

Both

Both the left and right like to pretend that evolution never happened to humans, in various ways. That’s interesting. Mental self-protection against atavism, I guess.

Note: not a defense of current terrible evo psych BS.

Progress

I don’t think progressives should completely drop whatever else they are working on and concentrate solely on a universal basic income, but achieving it would probably do more general good in this nation than any other single cause.

We’ve already passed the point of a large proportion of the working-age population being unable to find jobs – these people are just hidden, lost in the numbers, as you aren’t considered “unemployed” unless you are actively looking for work.

Nearly 15 years ago on my old blogs I was routinely made fun of for claiming that in the future, 50% or more of the population would be unable to find a full-time job of any kind.

We are nearing that world now. No longer is that idea laughed out of the mainstream as it was then. I’ve watched it move from crackpot to loopy-but-possible to now beginning to overtake and infiltrate mainstream economic thought.

A Universal Basic Income in the future will be the only way to prevent society from complete dissolution. The UBI, or something much like it, is really the only alternative.

Right-o

“There is no such thing as a normal period of history. Normality is a fiction of economic textbooks.”

―Joan Robinson

The future of Firefox

The future of Firefox is probably just a single-window, tabless browser that opens up Facebook when you click on it, and then doesn’t allow you to browse anywhere else.

It will be called Mozilla Facefox and it will ship with version 128, in about six months. 😉

All the turmoil

With all the turmoil in the design of operating systems and applications lately, you’d think there would be more actual improvement.

But there’s not, and there can’t be, and I’ll tell you why.

First, though, let’s take a short look at the history of books.

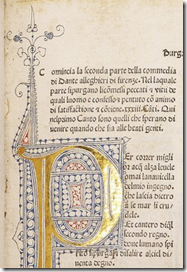

When books first started being printed en masse in the late 1400s and 1500s, they were produced in an extremely wide variety of formats and sizes, with widely-varying designs, with many attempting to imitate high-quality manuscript books.

It wasn’t until the 1600s or so that books really standardized as a format. A book from 1650 wouldn’t be that distinguishable from one today, other than the archaic language and font. The format – margin, one line per page, no columns, etc. – would not be any different.

With operating systems and applications something similar occurred, just in a shorter time frame. The first interfaces were clumsy due to both resource limitations and because the fields of UI and UX were new. Everyone then was in uncharted waters.

After about 20 years of use by the general public, a few very-similar standardized interfaces developed that best melded productivity and approachability.

![AOL vs Windows 8](http://lh6.ggpht.com/-CzNeiteWOio/T8jPxpuLcwI/AAAAAAAAJ4s/dvmv4tDFqIs/s1600/AOL%252520vs%252520Windows%2525208%25255B4%25255D.jpg)

Note that these might not have been the absolute best approaches – though how one can measure that is questionable – but they worked well enough for the majority of people. Furthermore, once enough people become accustomed to a use paradigm, changing it reduces productivity greatly, and often for a very long time, for only extremely minor benefit.

My point, then, is that GUI design standardized at a near-optimum, given current human and technology limitations, around a decade ago, and that designers now attempting to introduce useless “innovations” like Metro and Australis, even if their designs are better by some amount, actually serve to reduce net productivity.

If something like 3-D interfaces or neural interfaces ever arrives, then it would certainly make sense to re-examine and overturn many if not all widely-accepted UI design conventions.

However, all designers really can do now is make things worse, since interfaces (at least prior to the latest design manias) are already fairly near optimal. Ergo, even slightly net-positive changes are harmful, as it takes most people many years to completely acclimatize to such major changes with minor benefits.

Autocorrect

What the hell, autocorrect being any good?

I rarely text and hate doing it, but I turned autocorrect off on my phone and have never been happier. Its attempts to autocorrect me were about 10% helpful and 90% utter failures.

I hate any and all predictive features. Perhaps they work for the average user. They never work for me.